Light Cavities

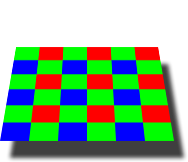

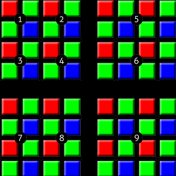

However, the illustration above would only produce grayscale images, since these cavities cannot distinguish how much of each color they have collected. To capture color images, a filter is placed over each cavity so that it admits only a particular color of light. Virtually all current digital cameras capture only one of three primary colors in each cavity, and so they discard roughly 2/3 of the incoming light. As a result, the camera has to approximate the other two primary colors in order to have full color at every pixel. The most common type of color filter array is called a "Bayer array," shown below.

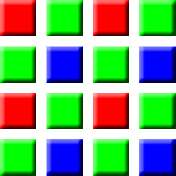

Color Filter Array

Photosites with Color Filters

A Bayer array consists of alternating rows of red-green and green-blue filters. Notice that the Bayer array contains twice as many green sensors as red or blue ones. The primary colors do not receive equal fractions of the total area because the human eye is more sensitive to green light than to either red or blue light. Redundancy with green pixels produces an image that appears less noisy and has finer detail than would be possible if each color were treated equally. This also explains why noise in the green channel is much lower than in the other two primary colors (see "Understanding Image Noise" for an example).
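The layout described above can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, and the choice of a red filter in the top-left corner (an "RGGB" ordering) is an assumption, since real sensors may start the pattern on a different corner.

```python
def bayer_color(row, col):
    """Return the filter color at (row, col), assuming an RGGB layout."""
    if row % 2 == 0:
        # Even rows alternate red, green, red, green, ...
        return "R" if col % 2 == 0 else "G"
    # Odd rows alternate green, blue, green, blue, ...
    return "G" if col % 2 == 0 else "B"


def bayer_pattern(height, width):
    """Build a height x width grid of filter colors."""
    return [[bayer_color(r, c) for c in range(width)] for r in range(height)]


def color_fractions(pattern):
    """Return the fraction of photosites covered by each filter color."""
    counts = {"R": 0, "G": 0, "B": 0}
    for row in pattern:
        for color in row:
            counts[color] += 1
    total = sum(counts.values())
    return {color: n / total for color, n in counts.items()}


pattern = bayer_pattern(4, 4)
for row in pattern:
    print(" ".join(row))
print(color_fractions(pattern))  # {'R': 0.25, 'G': 0.5, 'B': 0.25}
```

For any grid with even dimensions, half of the photosites end up green and a quarter each red and blue, which matches the two-to-one ratio noted above.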

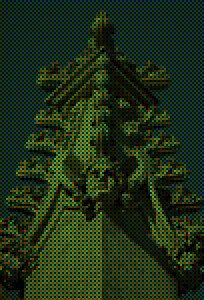

(shown at 200%)

What Your Camera Sees (through a Bayer array)

Note: Not all digital cameras use a Bayer array, but it is by far the most common setup. For example, the Foveon sensor captures all three colors at each pixel location, whereas other sensors might capture four colors in a similar array: red, green, blue and emerald green.

BAYER DEMOSAICING

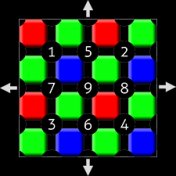

Bayer "demosaicing" is the process of translating this Bayer array of primary colors into a final image which contains full color information at each pixel. How is this possible if the camera is unable to directly measure full color? One way of understanding this is to instead think of each 2x2 array of red, green and blue as a single full color cavity.
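As a rough sketch of the "one full color cavity per 2x2 array" idea, the Python below collapses each non-overlapping 2x2 block of raw sensor values into a single color pixel. The function name, the sample values, and the RGGB ordering (red at the top-left of each block) are assumptions made for illustration, and averaging the two green samples is one simple choice rather than what any particular camera does.

```python
def demosaic_blocks(raw):
    """Collapse each non-overlapping 2x2 block into one (R, G, B) pixel.

    `raw` is a 2D list of sensor readings laid out as an assumed RGGB
    Bayer mosaic: red at even row/even column, blue at odd row/odd column,
    green elsewhere.
    """
    height, width = len(raw), len(raw[0])
    image = []
    for r in range(0, height - 1, 2):
        out_row = []
        for c in range(0, width - 1, 2):
            red = raw[r][c]
            # Two green samples per block; average them.
            green = (raw[r][c + 1] + raw[r + 1][c]) / 2
            blue = raw[r + 1][c + 1]
            out_row.append((red, green, blue))
        image.append(out_row)
    return image


# Made-up 4x4 raw readings, purely for illustration.
raw = [
    [200, 100, 180,  90],
    [120,  50, 110,  40],
    [160,  80, 140,  70],
    [ 60,  30,  50,  20],
]

image = demosaic_blocks(raw)
print(len(image), "x", len(image[0]))  # 2 x 2
print(image[0][0])                     # (200, 110.0, 50)
```

A 4x4 grid of photosites yields only a 2x2 color image here, so each dimension has half as many output pixels as there are photosites.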

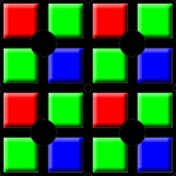

This would work, but most cameras take additional steps to extract even more image information from this color array. If the camera treated all of the colors in each 2x2 array as having landed in the same place, then it could achieve only half the resolution in both the horizontal and vertical directions. On the other hand, if a camera computed the color using several overlapping 2x2 arrays, then it could achieve a higher resolution than would be possible with a single set of 2x2 arrays. The following combination of overlapping 2x2 arrays could be used to extract more image information.

Note how we did not calculate image information at the very edges of the array, since we assumed the image continued in each direction. If these were actually the edges of the cavity array, then calculations here would be less accurate, since there are no longer pixels on all sides. This is typically negligible though, since information at the very edges of an image can easily be cropped out for cameras with millions of pixels.
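The overlapping approach can be sketched the same way. In the Python below, a 2x2 window slides one photosite at a time, so every window still contains one red, two green and one blue sample. The function names, the sample values and the RGGB ordering are illustrative assumptions, and this is a deliberately naive scheme rather than the algorithm any real camera uses.

```python
def bayer_color(row, col):
    """Return the filter color at (row, col), assuming an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic_overlapping(raw):
    """Compute one (R, G, B) pixel for every overlapping 2x2 window."""
    height, width = len(raw), len(raw[0])
    image = []
    for r in range(height - 1):
        out_row = []
        for c in range(width - 1):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            # Every 2x2 window of a Bayer mosaic holds 1 red, 2 green, 1 blue.
            for dr in (0, 1):
                for dc in (0, 1):
                    sums[bayer_color(r + dr, c + dc)] += raw[r + dr][c + dc]
            out_row.append((sums["R"], sums["G"] / 2, sums["B"]))
        image.append(out_row)
    return image


# Made-up 4x4 raw readings, purely for illustration.
raw = [
    [200, 100, 180,  90],
    [120,  50, 110,  40],
    [160,  80, 140,  70],
    [ 60,  30,  50,  20],
]

image = demosaic_overlapping(raw)
print(len(image), "x", len(image[0]))  # 3 x 3
print(image[0][1])                     # (180.0, 105.0, 50.0)
```

A 4x4 grid now yields a 3x3 color image instead of 2x2. The output is one pixel short in each dimension because no window can be centered beyond the outermost photosites, which is the edge effect described above.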

Other demosaicing algorithms exist which can extract slightly more resolution, produce images which are less noisy, or adapt to best approximate the image at each location.
